
Email: santosh[at]cyrionlabs[dot]org

Google Scholar, GitHub, Resume, LinkedIn

I am currently a research intern at the University of Washington, and a Machine Learning Researcher and Co-Founder at Cyrion Labs. My work focuses on human-computer interaction, multimodal learning, visual-language models, and the semantic web. In industry, I have worked on developing and scaling AI technology in roles ranging from Machine Learning Engineer to Full-Stack Developer at startups and established companies.


- April 2025: One Paper (A General-Purpose Framework for Real-Time Adaptive Multimodal Embodied Conversational Agents) Accepted to Show & Tell @ Interspeech
- April 2025: One Paper (PhysNav-DG: A Novel Adaptive Framework for Robust VLM-Sensor Fusion in Navigation Applications) Accepted to DG-EBF @ IEEE CVPR 2025
- March 2025: Second Demo (VIZ: Virtual & Physical Navigation System for the Visually Impaired) Accepted to IEEE CVPR 2025 as a Demo
- March 2025: One Demo (GenECA: A Generalizable Framework for Real-Time Multimodal Embodied Conversational Agents) Accepted to IEEE CVPR 2025 as a Demo
- March 2025: One Preprint Paper Posted on arXiv
- October 2024: Second Oral Presentation at IEEE MIT URTC
- October 2024: First Poster Presentation at IEEE MIT URTC
- September 2024: Poster Presentation at the Rice Ken Kennedy AI in Health Conference
- August 2024: Research Featured on The AI Timeline
- July 2024: First Preprint Paper Posted on arXiv
- June 2024: Joined as a research assistant at the SMU BAST Lab
- May 2024: Began an internship on Nanotech x AI at the UW Department of Electrical & Computer Engineering
- April 2024: First Poster Presentation at The Future of Biology Conference


Trisanth Srinivasan, Santosh Patapati
CVPR 2025 Demo [Poster]
Addresses the challenges faced by the visually impaired by utilizing generative AI to mimic human behavior for complex digital tasks and physical navigation.


Santosh Patapati, Trisanth Srinivasan
CVPR 2025 Demo [Video]
Introduces a robust framework for multimodal interactions with embodied conversational agents, emphasizing emotion-sensitive interaction.


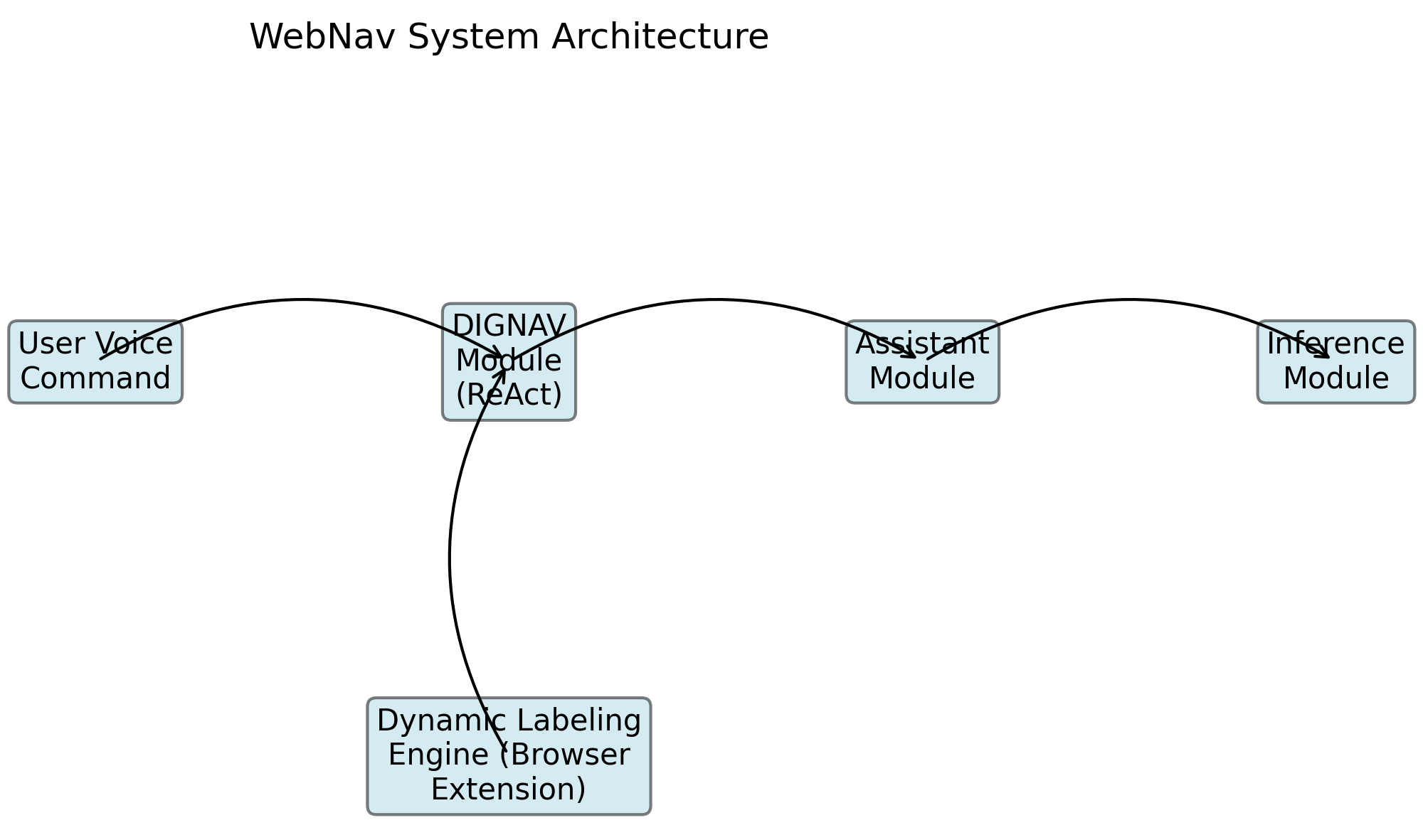

Trisanth Srinivasan, Santosh Patapati
Preprint, arXiv:2503.13843, Pending Publication, 2025 [PDF]
Presents a novel voice-controlled navigation agent using a ReAct-inspired architecture, offering improved accessibility for the visually impaired.


Santosh Patapati
Preprint, arXiv:2407.19340, Pending Publication, 2024 [PDF]
Presents a novel multimodal framework for automated major depressive disorder (MDD) prognosis from clinical interview recordings. Proposes a pipeline for real-world clinician use.


The website template was adapted from Jon Barron.